This article attempts to analyze the interpretability of the Transformer architecture.

Transformer Overview

Simplifications relative to the original Transformer

Some conclusions about the Transformer architecture

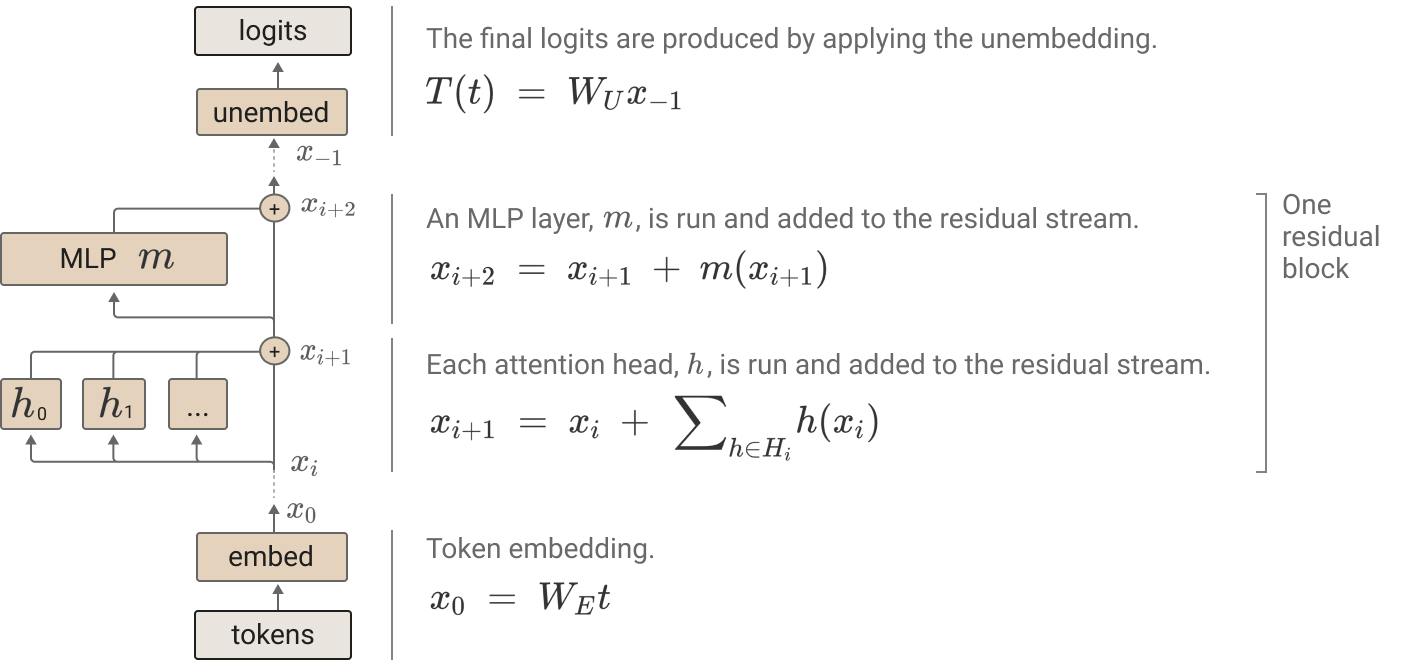

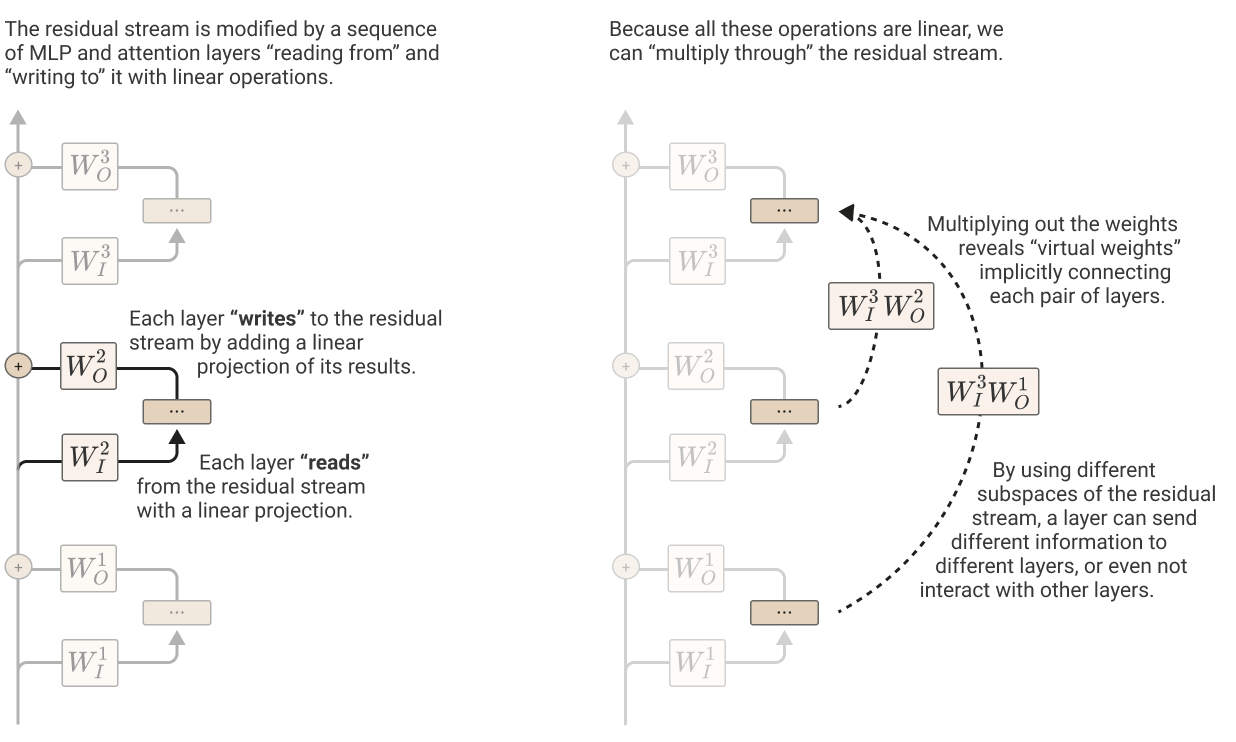

(A) The residual stream serves as a communication channel, allowing layers to interact without performing processing itself. Its deeply linear structure implies that it doesn’t have a “privileged basis” and can be rotated without changing model behavior.
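The rotation claim in (A) can be checked numerically. The block below is a minimal sketch under assumptions that are not in the original notes: a toy model with one embedding, one layer that reads from and writes to the residual stream, and an unembedding, with no layer normalization. All matrix names and sizes are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
d_vocab, d_model, d_hidden = 11, 8, 16  # hypothetical toy sizes

W_E = rng.normal(size=(d_model, d_vocab))     # embedding: writes to the stream
W_in = rng.normal(size=(d_hidden, d_model))   # layer input: reads from the stream
W_out = rng.normal(size=(d_model, d_hidden))  # layer output: writes to the stream
W_U = rng.normal(size=(d_vocab, d_model))     # unembedding: reads from the stream


def logits(W_E, W_in, W_out, W_U, token):
    x = W_E[:, token]                        # embed the token into the residual stream
    x = x + W_out @ np.maximum(W_in @ x, 0)  # layer reads, computes, adds its output back
    return W_U @ x


# A random orthogonal matrix (a rotation, possibly with a reflection) via QR.
R, _ = np.linalg.qr(rng.normal(size=(d_model, d_model)))

original = logits(W_E, W_in, W_out, W_U, token=3)
# Rotate everything that writes to the stream by R and everything that reads by R^T.
rotated = logits(R @ W_E, W_in @ R.T, R @ W_out, W_U @ R.T, token=3)

max_diff = float(np.max(np.abs(original - rotated)))
print(max_diff)  # tiny: only floating-point error remains
```

The nonlinearity sits inside the layer, not in the residual stream itself, which is why the rotation cancels out.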

(B) Virtual weights directly connect any pair of layers by multiplying out their interactions through the residual stream. These virtual weights describe how later layers read information written by previous layers.
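The idea of virtual weights in (B) can be illustrated with two matrices. This is a rough sketch with hypothetical names: `W_out_1` stands for the output weights of an earlier layer and `W_in_2` for the input weights of a later layer.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model, d_hidden = 8, 16  # hypothetical toy sizes

W_out_1 = rng.normal(size=(d_model, d_hidden))  # earlier layer writes to the stream
W_in_2 = rng.normal(size=(d_hidden, d_model))   # later layer reads from the stream

# Multiplying out the interaction through the residual stream.
virtual_weights = W_in_2 @ W_out_1              # shape (d_hidden, d_hidden)

h1 = rng.normal(size=d_hidden)                  # some activation of the earlier layer
via_stream = W_in_2 @ (W_out_1 @ h1)            # write to the stream, then read
direct = virtual_weights @ h1                   # same result without the stream
```

The two results agree because the residual stream only adds and passes vectors along, so the read and write matrices compose into one linear map.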

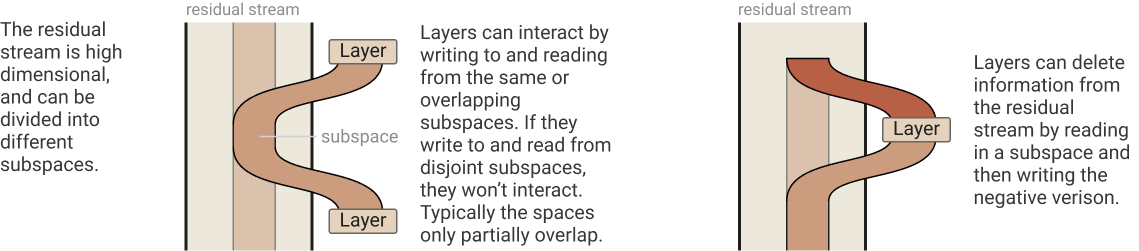

(C) Subspaces and Residual Stream Bandwidth: The residual stream is a high-dimensional vector space, allowing layers to store information in different subspaces. Dimensions of the residual stream act like “memory” or “bandwidth,” with high demand due to the large number of computational dimensions relative to residual stream dimensions. Some MLP neurons and attention heads may manage memory by clearing residual stream dimensions set by other layers.

(D) Attention Heads are Independent and Additive: Attention heads operate independently and add their output back into the residual stream. This independence allows for a clearer theoretical understanding of transformer layers.

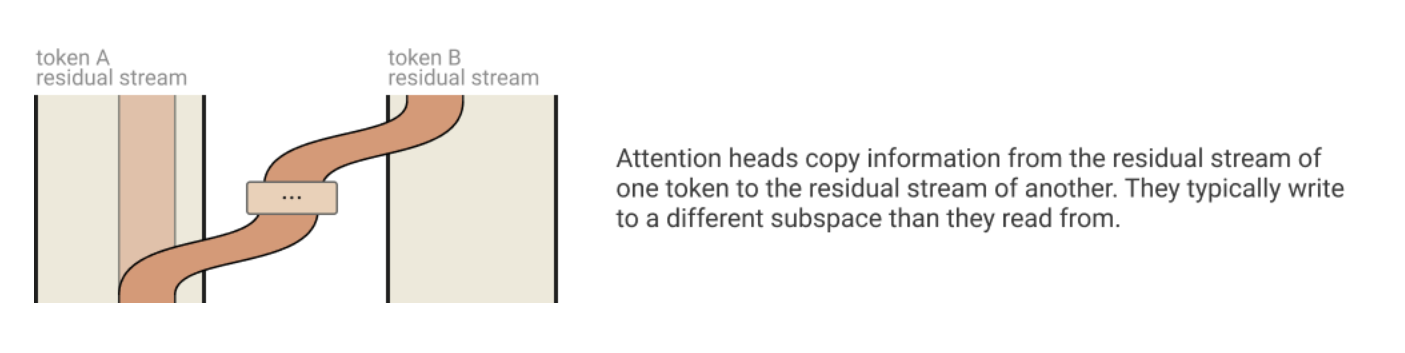

(E) Attention Heads as Information Movement:

- Attention heads move information from one token’s residual stream to another’s.

- The process involves two linear operations: the attention pattern ($A$) and the output-value matrix ($W_O W_V$).

- The attention pattern determines which token’s information is moved, while the output-value matrix determines what information is read and how it is written. These two processes are independent from each other.

(F) Other Observations about Attention Heads:

- Attention heads perform a linear operation if the attention pattern is fixed.

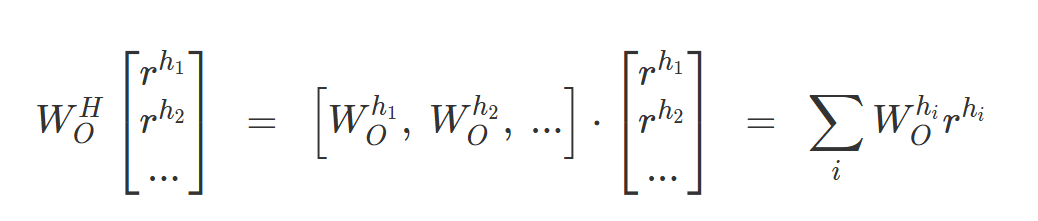

- The matrices $W_Q$ and $W_K$ always operate together, as do $W_O$ and $W_V$.

- Keys, queries, and value vectors are intermediary by-products of computing low-rank matrices.

- Combined matrices $W_{OV} = W_O W_V$ and $W_{QK} = W_Q^T W_K$ can be defined for simplicity.

- Products of attention heads behave much like attention heads themselves. (Attention heads across different layers can be combined. We call these virtual attention.)
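The observations in (E) and (F) can be checked on a toy head. The sketch below assumes random weights, a causal mask, and no $1/\sqrt{d_{head}}$ scaling (omitted on both sides of the comparison). It computes a head once through explicit queries, keys, and values, and once through the combined matrices $W_{QK}$ and $W_{OV}$. The sizes and the helper `causal_softmax` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
n_tokens, d_model, d_head = 5, 8, 2  # hypothetical toy sizes

x = rng.normal(size=(n_tokens, d_model))  # one residual-stream vector per token
W_Q = rng.normal(size=(d_head, d_model))
W_K = rng.normal(size=(d_head, d_model))
W_V = rng.normal(size=(d_head, d_model))
W_O = rng.normal(size=(d_model, d_head))


def causal_softmax(scores):
    # Each destination token (row) may only attend to itself and earlier tokens.
    mask = np.tril(np.ones_like(scores, dtype=bool))
    scores = np.where(mask, scores, -np.inf)
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    return weights / weights.sum(axis=-1, keepdims=True)


# Explicit route: queries, keys, and values as intermediate vectors.
q, k, v = x @ W_Q.T, x @ W_K.T, x @ W_V.T
A_explicit = causal_softmax(q @ k.T)
h_explicit = (A_explicit @ v) @ W_O.T

# Combined route: only the low-rank products W_QK and W_OV appear.
W_QK = W_Q.T @ W_K
W_OV = W_O @ W_V
A_combined = causal_softmax(x @ W_QK @ x.T)
h_combined = A_combined @ x @ W_OV.T  # A mixes tokens, W_OV acts on each vector
```

Once `A_combined` is held fixed, the last line is linear in `x`, which is the sense in which a head with a frozen attention pattern is a linear operation.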

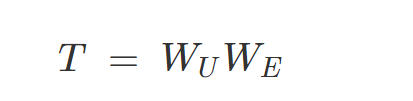

Zero-Layer Transformers

In a language model:

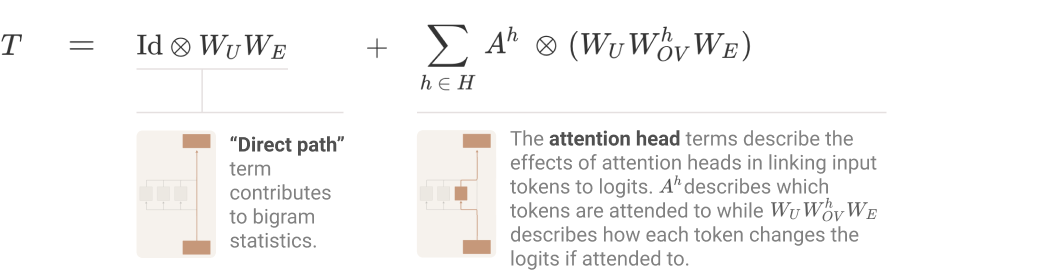

One-Layer Attn-Only Transformer

Formulation

- Effect of Source Token on Logits:

- If multiple destination tokens attend to the same source token with the same attention weight, the source token will influence the logits for the predicted output token in the same way for all those destination tokens.

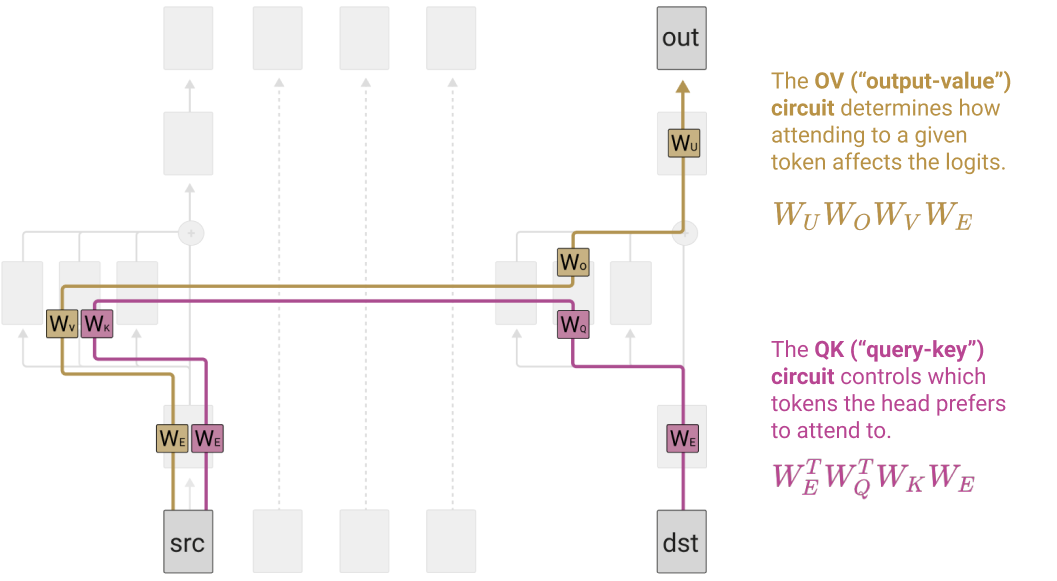

- Independent Consideration of OV and QK Circuits:

- It can be useful to think of the OV (Output-Value) and QK (Query-Key) circuits separately since they are individually understandable functions (linear or bilinear operations on matrices).

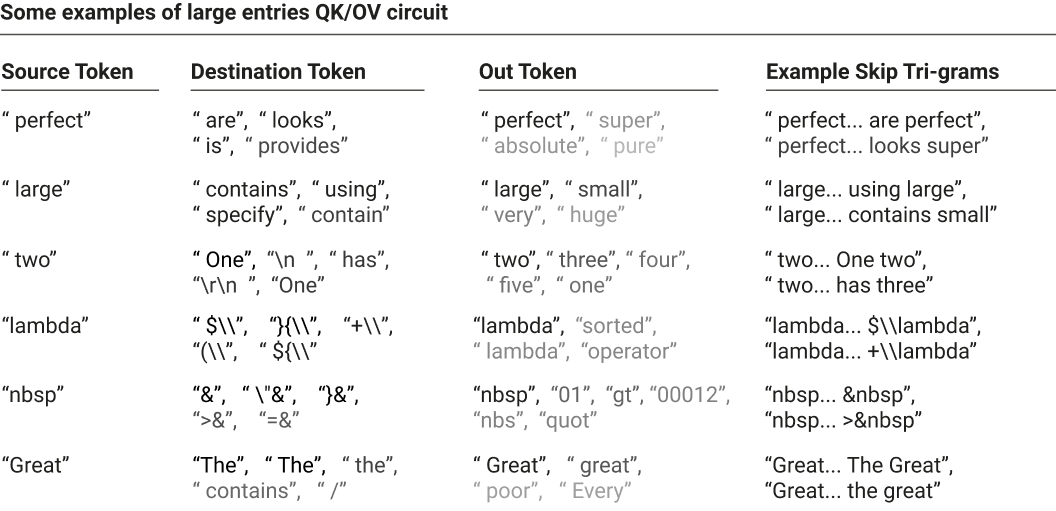

Skip-Trigrams

A One-Layer Attn-Only Transformer can be interpreted as a collection of skip-trigrams:

- The QK circuit determines which “source” token the current “destination” token attends to.

- The OV circuit describes the effect on the “out” predictions for the next token.

- Together, they form a “skip-trigram” [source]… [destination] [out].
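The two circuits can be written as vocabulary-by-vocabulary matrices. The following is a minimal sketch, assuming random weights and ignoring positional embeddings; the helper `top_skip_trigram` is hypothetical and only reads off the largest entry of each circuit for a given source token.

```python
import numpy as np

rng = np.random.default_rng(0)
d_vocab, d_model, d_head = 6, 8, 2  # hypothetical toy sizes

W_E = rng.normal(size=(d_model, d_vocab))
W_U = rng.normal(size=(d_vocab, d_model))
W_Q = rng.normal(size=(d_head, d_model))
W_K = rng.normal(size=(d_head, d_model))
W_V = rng.normal(size=(d_head, d_model))
W_O = rng.normal(size=(d_model, d_head))

# QK circuit: entry [destination, source] is the pre-softmax attention score.
qk_circuit = W_E.T @ W_Q.T @ W_K @ W_E
# OV circuit: entry [out, source] is the logit effect on "out" when "source" is attended to.
ov_circuit = W_U @ W_O @ W_V @ W_E


def top_skip_trigram(source):
    """Return (source, destination, out) with the largest score in each circuit."""
    destination = int(np.argmax(qk_circuit[:, source]))
    out = int(np.argmax(ov_circuit[:, source]))
    return source, destination, out


trigram = top_skip_trigram(0)
```

Both matrices are `d_vocab` by `d_vocab` but have rank at most `d_head`, so in a real model they are better kept in factored form than materialized.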

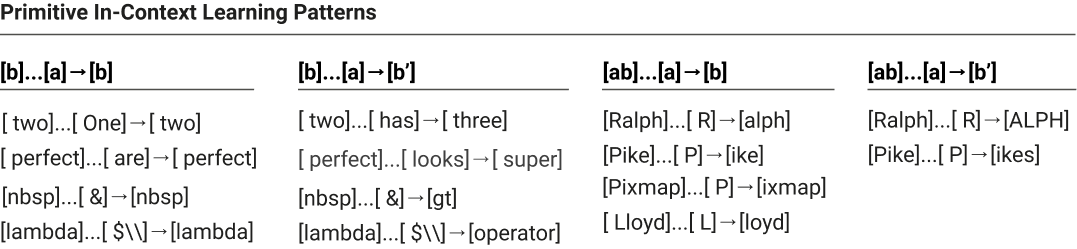

This makes copying and primitive in-context learning possible:

- Attention heads often dedicate capacity to copying tokens, increasing the probability of the token and similar tokens.

- Tokens are copied to plausible positions based on bigram statistics.

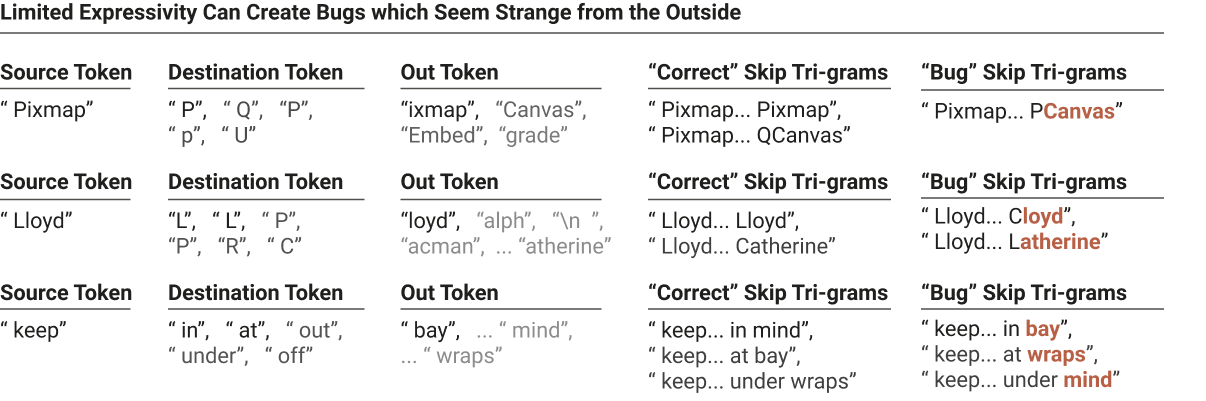

However, this skip-trigram style of modeling also has a problem:

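Because our one-layer model represents skip-trigrams in a factored form, raising the probability of keep…in mind and keep…at bay forces it to also raise the probability of keep…in bay and keep…at mind.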
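The keep…in mind example can be made concrete for a single head. In the sketch below all numbers are made up for illustration: for a fixed source token "keep", the contribution to each (destination, out) pair is the product of an attention weight and an OV logit effect, so the table of contributions is an outer product.

```python
import numpy as np

destinations = ["in", "at"]
outs = ["mind", "bay"]

# Hypothetical attention weights from each destination token back to "keep".
attention_to_keep = np.array([0.6, 0.5])
# Hypothetical OV logit effect of "keep" on each out token.
ov_logit_effect = np.array([2.0, 1.5])

# contribution[i, j]: logit boost for outs[j] when destinations[i] attends to "keep".
contribution = np.outer(attention_to_keep, ov_logit_effect)

for i, destination in enumerate(destinations):
    for j, out in enumerate(outs):
        print(f"keep…{destination} {out}: {contribution[i, j]:+.2f}")
```

Because the table has rank one, the head cannot boost "in mind" and "at bay" without also boosting "in bay" and "at mind".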

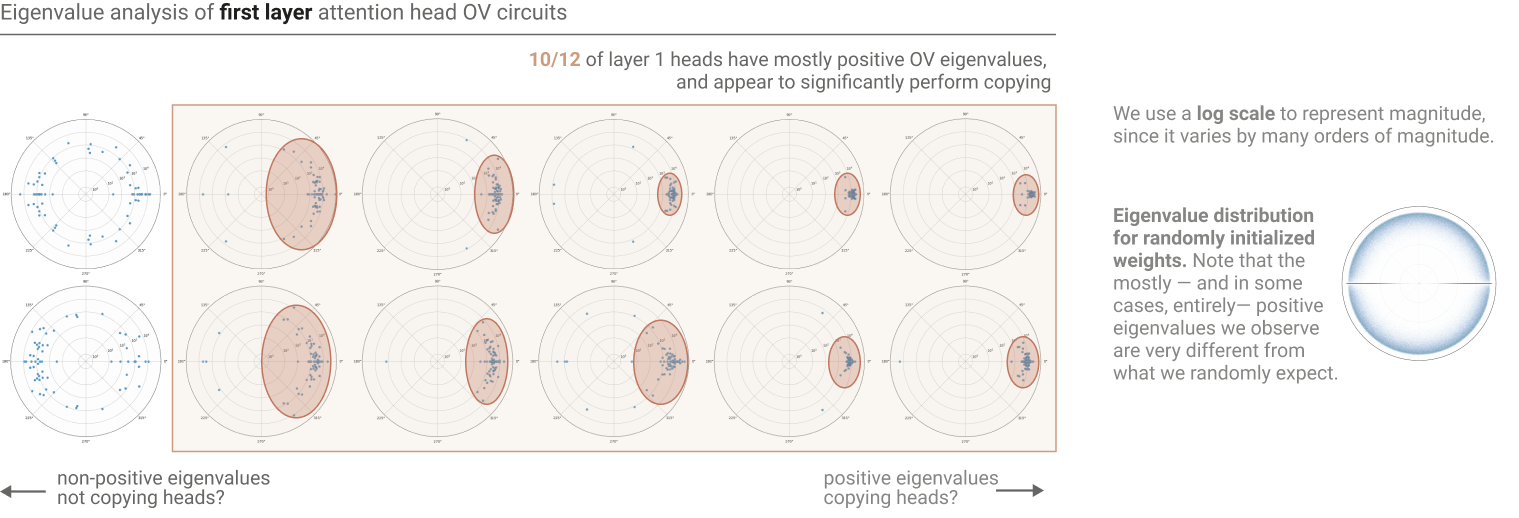

How to detect this copying behavior in attention heads

To detect copying behavior in transformer models, we look for OV circuit matrices that map a vector back onto itself, thus increasing the probability of a token recurring. This involves examining the eigenvalues and eigenvectors of the OV circuit matrices. If a matrix has positive eigenvalues, it suggests that a linear combination of tokens increases the logits of those same tokens, indicating copying behavior.

This method reveals that many attention heads possess positive eigenvalues, aligning with the copying structure. While positive eigenvalues are strong indicators of copying, they are not definitive proof, as some matrices with positive eigenvalues may still decrease the logits of certain tokens. Alternative methods, like analyzing the matrix diagonal, also point to copying but lack robustness. Despite its imperfections, the eigenvalue-based approach serves as a useful summary statistic for identifying copying behavior.
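One way to turn the eigenvalue idea into a single number is the ratio $\sum_i \lambda_i / \sum_i |\lambda_i|$, which is close to 1 when the eigenvalues are mostly positive. The sketch below assumes that statistic. The toy "copying" matrix is built from a hypothetical tied unembedding ($W_U = W_E^T$) with an identity OV map and is not taken from any real model.

```python
import numpy as np


def copying_score(ov_circuit):
    """Sum of eigenvalues over sum of their magnitudes, in [-1, 1]."""
    eigenvalues = np.linalg.eigvals(ov_circuit)
    # Complex eigenvalues of a real matrix come in conjugate pairs, so the sum is real.
    return float(np.real(eigenvalues.sum()) / np.abs(eigenvalues).sum())


rng = np.random.default_rng(0)
d_vocab, d_model = 6, 4  # hypothetical toy sizes
W_E = rng.normal(size=(d_model, d_vocab))

copying_circuit = W_E.T @ W_E             # positive semi-definite: boosts the same tokens
anti_copying_circuit = -copying_circuit   # suppresses the same tokens
mixed_circuit = np.diag([1.0, -1.0])      # one positive and one negative eigenvalue

print(copying_score(copying_circuit))
print(copying_score(anti_copying_circuit))
print(copying_score(mixed_circuit))
```

As the text notes, a high score is a summary statistic rather than a proof: a matrix with positive eigenvalues can still lower the logits of some individual tokens.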

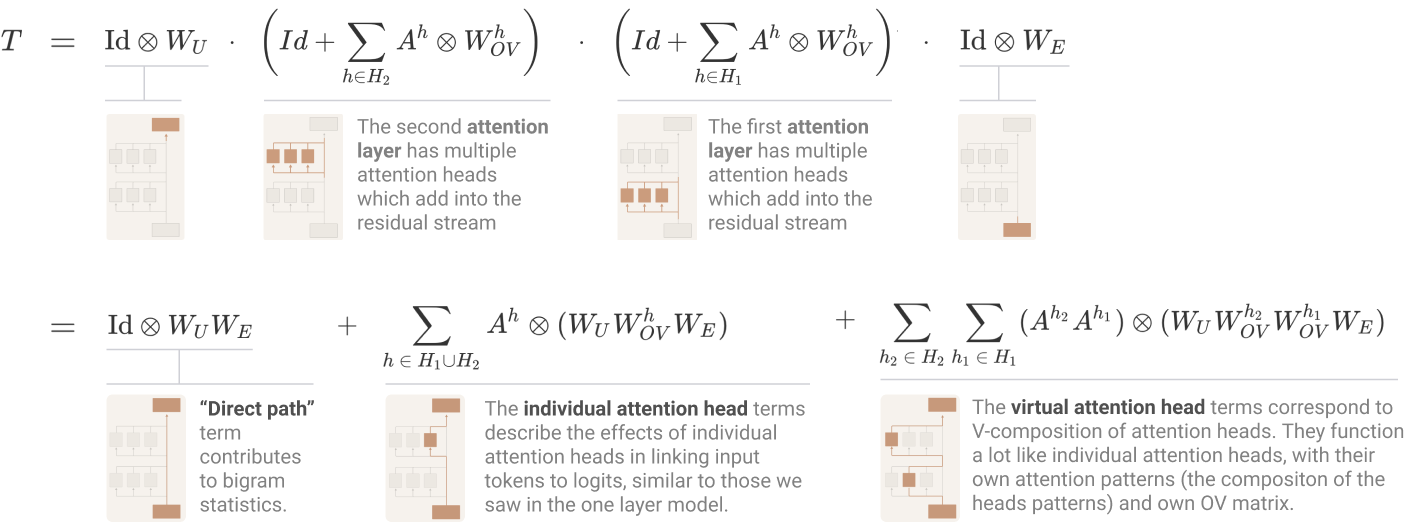

Two-Layer Attn-Only Transformer

Formulation

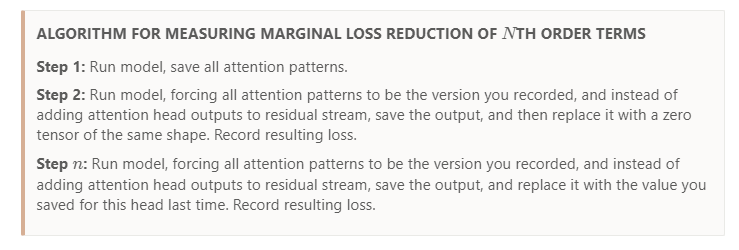

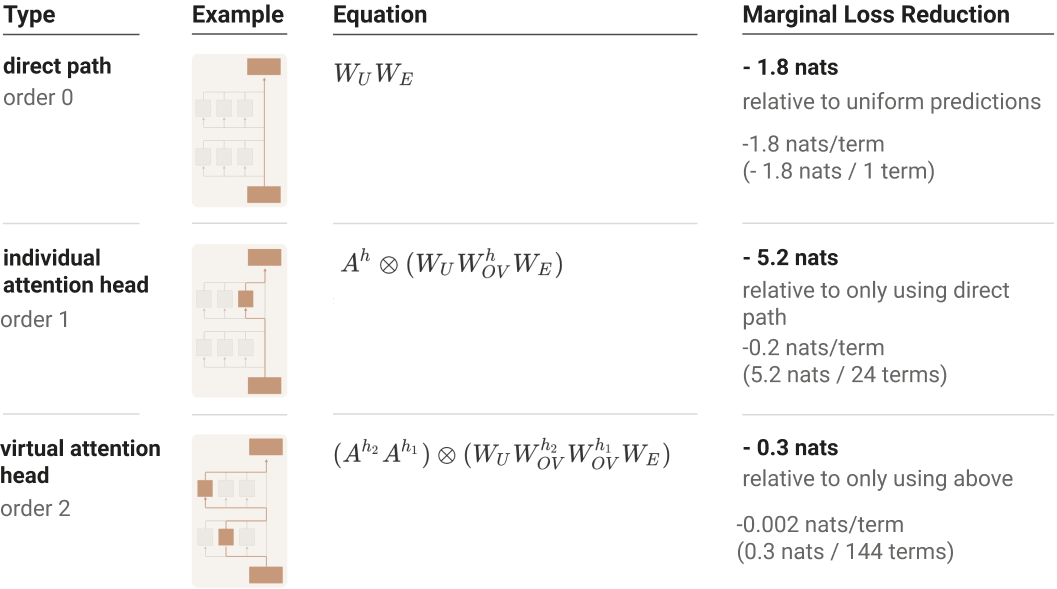

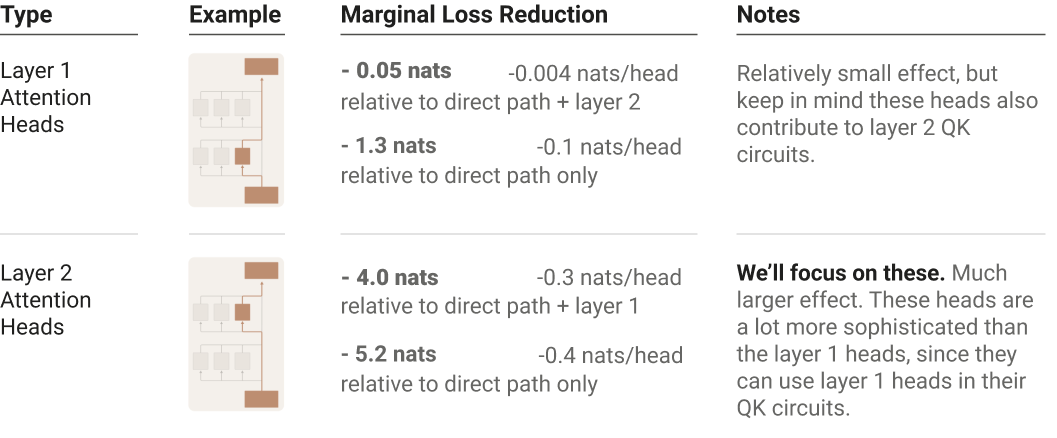

Term Importance Analysis

We use the method above to analyze the importance of each term in the two-layer Transformer equation.

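We conclude that for understanding two-layer attention-only models, we shouldn’t prioritize understanding the second-order “virtual attention heads” but should instead focus on the direct path and the individual attention head terms.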

We can further subdivide these individual attention head terms into those in layer 1 and layer 2:

This suggests we should focus on the second layer head terms.

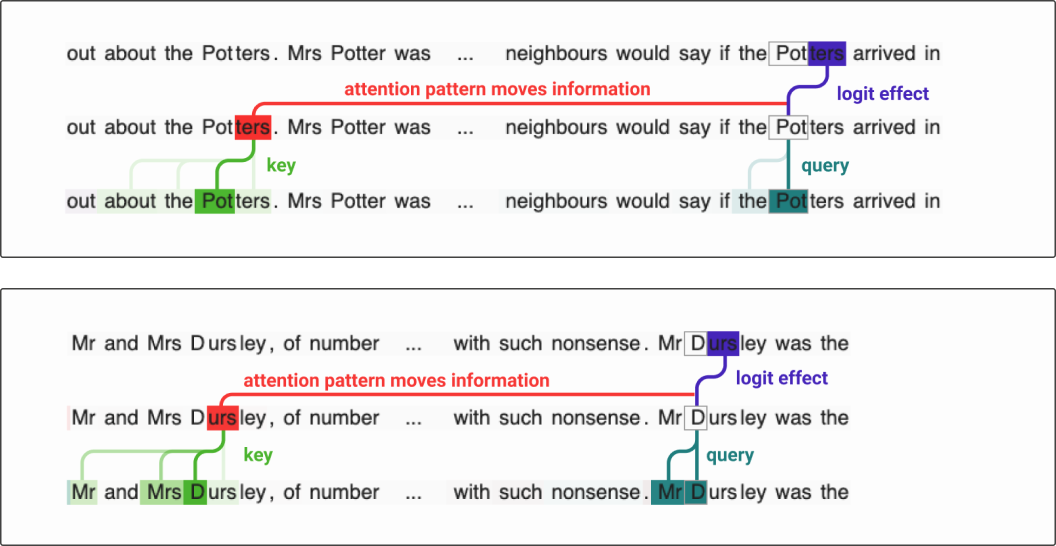

Induction Head

The biggest difference between a two-layer Transformer and a one-layer Transformer is:

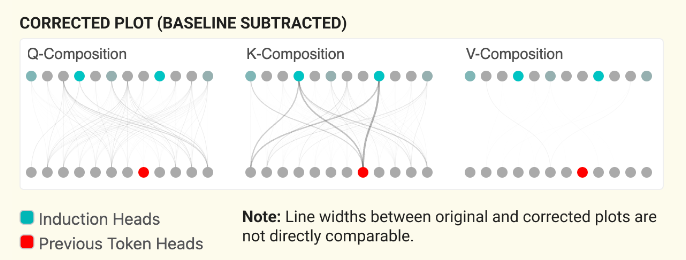

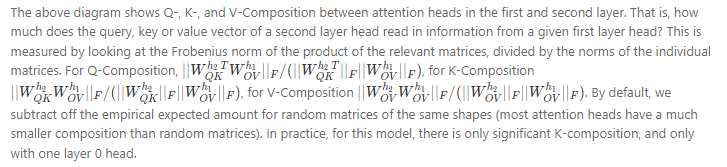

By dividing the Frobenius norm of the product of the matrices by the Frobenius norms of the individual matrices, we obtain a normalized measure that accounts for the sizes of the matrices involved. This normalization helps to compare the relative importance of different compositions. This ratio indicates how much the product deviates from what would be expected if the matrices were random, providing insight into the significance of the interaction.
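The normalized measure described above can be sketched in a few lines. The function name `composition_score` and the rank-one test matrices are hypothetical; they are chosen so that one pair reads exactly the direction the other writes, while a second pair reads an unrelated direction.

```python
import numpy as np


def composition_score(W_later, W_earlier):
    """Frobenius norm of the product divided by the product of Frobenius norms."""
    product_norm = np.linalg.norm(W_later @ W_earlier, ord="fro")
    norms = np.linalg.norm(W_later, ord="fro") * np.linalg.norm(W_earlier, ord="fro")
    return float(product_norm / norms)


rng = np.random.default_rng(0)
d_model = 64  # hypothetical toy size
u, v, w, r = rng.normal(size=(4, d_model))

W_earlier = np.outer(u, v)   # earlier matrix writes along direction u
W_reads_u = np.outer(w, u)   # later matrix reads exactly direction u
W_reads_r = np.outer(w, r)   # later matrix reads an unrelated direction r

aligned = composition_score(W_reads_u, W_earlier)
unaligned = composition_score(W_reads_r, W_earlier)
print(aligned, unaligned)
```

The score never exceeds 1, since the Frobenius norm is submultiplicative. For these rank-one matrices the unaligned score is the absolute cosine between `u` and `r`, which is small for random directions in a high-dimensional space.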

We can see that there are some very prominent pairs in the K-Composition term.

Take the fourth row as an example:

Then:

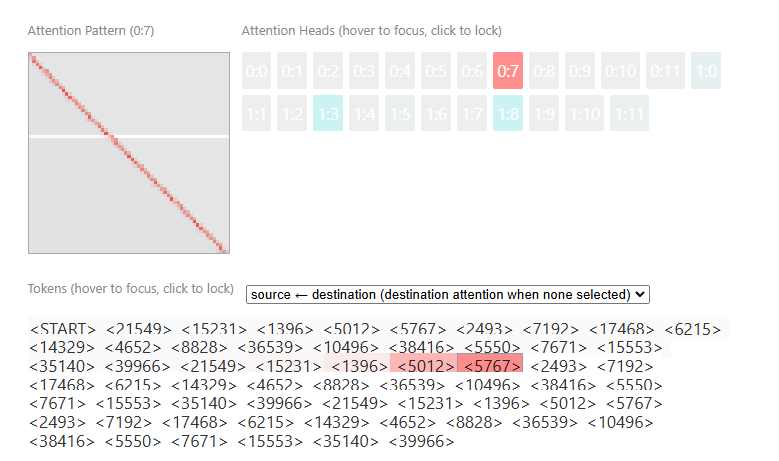

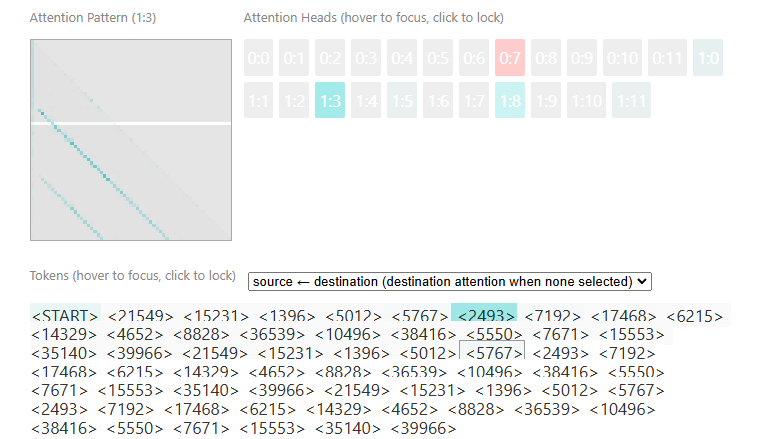

These two figures show the tokens that attend to <5767>.

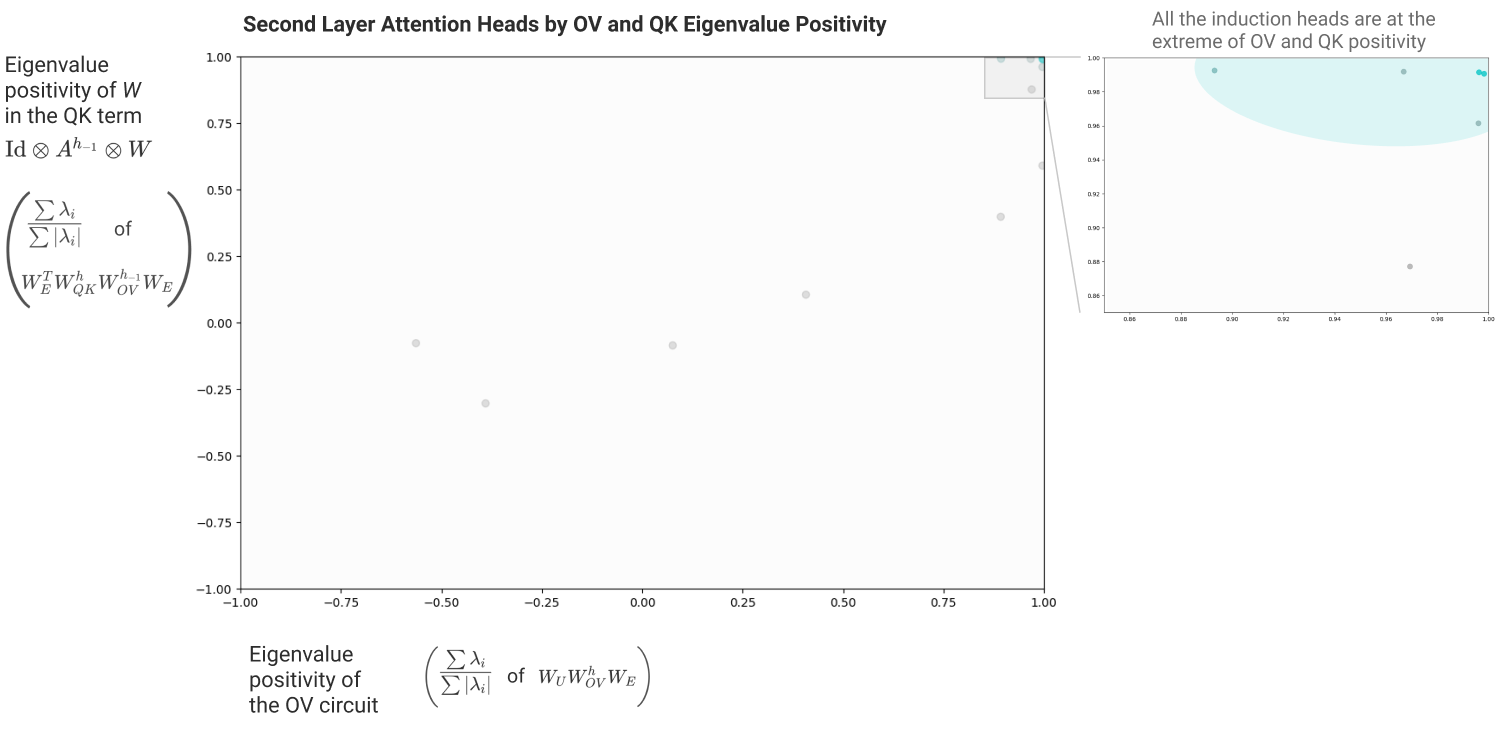

How do we find induction heads? Our mechanistic theory suggests that induction heads must do two things:

- Have a “copying” OV circuit matrix (in previous layer).

- Have a “same matching” QK circuit matrix. (The previous layer has already moved information about the previous token into the current hidden state.)
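The two requirements can be read as a small algorithm on token sequences. The block below is a plain-Python illustration of that behavior, not an analysis of real weights: the hypothetical function `induction_prediction` looks for an earlier position whose previous token matches the current token ("same matching") and then returns the token found there ("copying").

```python
def induction_prediction(tokens):
    """Predict the next token by completing a previously seen [A][B] ... [A] pattern."""
    current = tokens[-1]
    # Scan from the most recent position backwards.
    for j in range(len(tokens) - 1, 0, -1):
        # "Same matching": the token before position j equals the current token.
        if tokens[j - 1] == current:
            # "Copying": predict the token that followed it last time.
            return tokens[j]
    return None  # the current token has not appeared before


print(induction_prediction(["A", "B", "C", "A"]))  # B
print(induction_prediction(["A", "B", "C", "D"]))  # None
```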